Piotr Dudek, Thomas Richardson, Laurie Bose, Stephen Carey, Jianing Chen, Colin Greatwood, Yanan Liu, Walterio Mayol-Cuevas. Sensor-level computer vision with pixel processor arrays for agile robots. Science Robotics, 2022. [PDF@SR] (The author's PDF is available upon request.)

Walterio Mayol-Cuevas Research

Friday 30 September 2022

Sensor-level computer vision with pixel processor arrays for agile robots, Science Robotics 2022

Monday 15 August 2022

On-Sensor Binarized CNN Inference with Dynamic Model Swapping in Pixel Processor Arrays, Frontiers in Neuroscience 2022

Liu Y, Bose L, Fan R, Dudek P and Mayol-Cuevas W. On-sensor binarized CNN inference with dynamic model swapping in pixel processor arrays. Frontiers in Neuroscience. Neuromorphic Engineering. 15 August 2022. [PDF]

In this research, we explore novel ways to develop and deploy visual inference on the image plane, including dynamically swapping CNN modules on the fly to fit larger models into a Pixel Processor Array.

Sunday 8 May 2022

Rebellion and Disobedience as Useful Tools in Human-Robot Interaction Research - The Handheld Robotics Case, RaD in AI workshop AAMAS 2022

W.W. Mayol-Cuevas. Rebellion and Disobedience as Useful Tools in Human-Robot Interaction Research - The Handheld Robotics Case. CoRR abs/2205.03968. Rebellion and Disobedience in AI Workshop, AAMAS (2022) [PDF]

Monday 4 October 2021

Yao Lu, Walterio W. Mayol-Cuevas: The Object at Hand: Automated Editing for Mixed Reality Video Guidance from Hand-Object Interactions. IEEE ISMAR 2021. [PDF]

Monday 29 March 2021

Look-ahead fixations during visuomotor behavior: Evidence from assembling a camping tent. Journal of Vision 2021

Brian Sullivan, Casimir J. H. Ludwig, Dima Damen, Walterio Mayol-Cuevas, Iain D. Gilchrist; Look-ahead fixations during visuomotor behavior: Evidence from assembling a camping tent. Journal of Vision 2021;21(3):13. [PDF]

Monday 1 March 2021

Weighted Node Mapping and Localisation on a Pixel Processor Array. IEEE ICRA 2021

H. Castillo Elizalde, Y. Liu, L. Bose and W. Mayol-Cuevas. Weighted Node Mapping and Localisation on a Pixel Processor Array. IEEE ICRA 2021. [PDF]

Wednesday 30 September 2020

Fully Embedding Fast Convolutional Networks on Pixel Processor Arrays. ECCV 2020

L Bose, P Dudek, J Chen, S.J. Carey, W Mayol-Cuevas. Fully Embedding Fast Convolutional Networks on Pixel Processor Arrays. ECCV 2020. [PDF]

Visual Odometry Using Pixel Processor Arrays for Unmanned Aerial Systems in GPS Denied Environments. Frontiers in Robotics and AI, 2020

A. McConville, L. Bose, R. Clarke, W. Mayol-Cuevas, J. Chen, C. Greatwood, S. Carey, P. Dudek, T. Richardson. Visual Odometry Using Pixel Processor Arrays for Unmanned Aerial Systems in GPS Denied Environments. Frontiers in Robotics and AI, Vol. 7, pp. 126. September 2020. [Paper]

Friday 31 July 2020

Geometric Affordance Perception: Leveraging Deep 3D Saliency With the Interaction Tensor. Frontiers in Neurorobotics 2020

E. Ruiz and W. Mayol-Cuevas, Geometric Affordance Perception: Leveraging Deep 3D Saliency With the Interaction Tensor. Frontiers in Neurorobotics, July, 2020. [Paper] [Code]

Tuesday 30 June 2020

Action Modifiers: Learning From Adverbs in Instructional Videos, CVPR 2020

Hazel Doughty, Ivan Laptev, Walterio Mayol-Cuevas, Dima Damen; Action Modifiers: Learning From Adverbs in Instructional Videos. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 868-878. 2020. [PDF]

Tuesday 29 October 2019

A camera that CNNs: Towards Embedded Neural Networks on Pixel Processor Arrays, (ICCV 2019 Oral)

We present a convolutional neural network implementation for pixel processor array (PPA) sensors. PPA hardware consists of a fine-grained array of general-purpose processing elements, each capable of light capture, data storage, program execution, and communication with neighboring elements. This allows images to be stored and manipulated directly at the point of light capture, rather than having to transfer images to external processing hardware. Our CNN approach divides this array into 4x4 blocks of processing elements, essentially trading off image resolution for increased local memory capacity per 4x4 "pixel". We implement parallel operations for image addition, subtraction and bit-shifting in this 4x4 block format. Using these components, we formulate how to perform ternary-weight convolutions on these images, compactly store the results of such convolutions, perform max-pooling, and transfer the resulting sub-sampled data to an attached microcontroller. We train ternary-weight filter CNNs for digit recognition and a simple tracking task, and demonstrate inference of these networks on the SCAMP5 PPA system. This work represents a first step towards embedding neural network processing capability directly onto the focal plane of a sensor.

A Camera That CNNs: Towards Embedded Neural Networks on Pixel Processor Arrays, Laurie Bose, Jianing Chen, Stephen J. Carey, Piotr Dudek and Walterio Mayol-Cuevas. International Conference on Computer Vision ICCV 2019. Seoul, Korea, 2019. arXiv [PDF].
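The ICCV 2019 abstract above builds ternary-weight convolutions from nothing more than image addition, subtraction and shifting. The following is a minimal sketch of that idea, assuming a plain 2D integer array rather than the paper's 4x4 block storage format, and ignoring the register and bit-width limits of SCAMP5. All function names (`shift`, `ternary_conv`, `max_pool2`) are hypothetical, and the paper's actual procedure may differ.

```python
from typing import List

Image = List[List[int]]


def shift(img: Image, dy: int, dx: int) -> Image:
    """Return a copy with out[y][x] = img[y+dy][x+dx]; zero outside the image."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy, sx = y + dy, x + dx
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = img[sy][sx]
    return out


def add(a: Image, b: Image) -> Image:
    return [[p + q for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]


def sub(a: Image, b: Image) -> Image:
    return [[p - q for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]


def ternary_conv(img: Image, kernel: List[List[int]]) -> Image:
    """Cross-correlate img with a 3x3 kernel whose weights are in {-1, 0, +1}.

    No multiplications are used: each +1 tap adds a shifted copy of the image,
    each -1 tap subtracts one, and 0 taps are skipped entirely.
    """
    h, w = len(img), len(img[0])
    acc = [[0] * w for _ in range(h)]
    for ky in range(3):
        for kx in range(3):
            wgt = kernel[ky][kx]
            if wgt not in (-1, 0, 1):
                raise ValueError("kernel weights must be ternary")
            if wgt == 0:
                continue
            shifted = shift(img, ky - 1, kx - 1)
            acc = add(acc, shifted) if wgt == 1 else sub(acc, shifted)
    return acc


def max_pool2(img: Image) -> Image:
    """2x2 max-pooling with stride 2 (assumes even height and width)."""
    return [
        [max(img[y][x], img[y][x + 1], img[y + 1][x], img[y + 1][x + 1])
         for x in range(0, len(img[0]), 2)]
        for y in range(0, len(img), 2)
    ]
```

Because every weight is -1, 0 or +1, the only per-pixel operations needed are add and subtract, which is what makes this form of convolution a good fit for simple processing elements; zero weights cost nothing at all.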

Sunday 29 September 2019

Rebellion and Obedience: The Effects of Intention Prediction in Cooperative Handheld Robots (IROS 2019)

Within this work, we explore intention inference for user actions in the context of a handheld robot setup. Handheld robots share the shape and properties of handheld tools while being able to process task information and aid manipulation. Here, we propose an intention prediction model to enhance cooperative task solving. The model derives intention from the user’s gaze pattern, which is captured using a robot-mounted remote eye tracker. The proposed model runs in real time and gives reliable accuracy up to 1.5 s before the predicted actions are executed. We assess the model in an assisted pick-and-place task and show how the robot’s intention obedience or rebellion affects cooperation with the robot.

Janis Stolzenwald and Walterio Mayol-Cuevas, "Rebellion and Obedience: The Effects of Intention Prediction in Cooperative Handheld Robots", to be published at 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, 2019. [PDF]

Thursday 30 May 2019

The Pros and Cons: Rank-aware Temporal Attention for Skill Determination in Long Videos CVPR 2019

Wednesday 19 September 2018

Who's Better, Who's Best: Skill Determination in Video using Deep Ranking. (CVPR 2018 Spotlight)

CVPR Spotlight talk

Published at CVPR2018.

Here we explore a new problem for egocentric perception: how to determine who is better at a task, in an unsupervised manner. We use deep methods to rank videos both for medical tasks (for which expert scoring exists for validation) and for more complex daily-living tasks (for which scoring is ambiguous).

Saturday 1 September 2018

I Can See Your Aim: Estimating User Attention From Gaze For Handheld Robot Collaboration (IROS2018)

Janis Stolzenwald, Walterio W. Mayol-Cuevas. "I Can See Your Aim: Estimating User Attention From Gaze For Handheld Robot Collaboration". IROS 2018.

Also see www.handheldrobotics.org for extended information on this project line.

Tuesday 1 May 2018

Where can I do this? Geometric Affordances from a Single Example with the Interaction Tensor (ICRA2018)

IEEE ICRA2018:

Eduardo Ruiz, Walterio W. Mayol-Cuevas: Where can I do this? Geometric Affordances from a Single Example with the Interaction Tensor. ICRA 2018.

This paper introduces and evaluates a new tensor field representation to express the geometric affordance of one object over another. Evaluations also include crowdsourced comparisons confirming the validity of our affordance proposals, which agree with human judgments 84% of the time on average, 20-40% better than the baseline methods.

Tuesday 24 October 2017

Visual Odometry for Pixel Processor Arrays (ICCV2017 Spotlight)

"Visual Odometry for Pixel Processor Arrays [PDF]", Laurie Bose, Jianing Chen, Stephen J. Carey, Piotr Dudek and Walterio Mayol-Cuevas. International Conference on Computer Vision (ICCV), Venice, Italy, 2017.

ICCV2017 Spotlight Talk

We present an approach to estimating constrained egomotion on a Pixel Processor Array (PPA). These devices embed processing and data storage capability into the pixels of the image sensor, allowing fast, low-power parallel computation directly on the image plane. Rather than following the standard visual pipeline, in which whole images are transferred to an external general-purpose processing unit, our approach performs all computation on the PPA itself, with the camera’s estimated motion as the only information output. Our approach estimates 3D rotation and 1D translation up to an unknown scale. We introduce methods for image scaling, rotation and alignment that are performed solely on the PPA and form the basis for motion estimation. We demonstrate the algorithms on a SCAMP-5 vision chip, achieving frame rates higher than 1000Hz at about 2W power consumption. Project website: www.project-agile.org
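The abstract names image alignment as one building block of the motion estimate. As a hedged illustration of the general idea only, the sketch below aligns two small images by exhaustively testing integer shifts and keeping the one with the lowest mean absolute difference. It runs sequentially on a conventional CPU, handles translation only (no rotation or scaling), and the names `mean_abs_diff` and `best_shift` are hypothetical; it is not the PPA implementation described in the paper.

```python
from typing import List, Tuple

Image = List[List[int]]


def mean_abs_diff(a: Image, b: Image, dy: int, dx: int) -> float:
    """Mean |a[y][x] - b[y+dy][x+dx]| over the region where both images overlap."""
    h, w = len(a), len(a[0])
    total, count = 0, 0
    for y in range(h):
        for x in range(w):
            yy, xx = y + dy, x + dx
            if 0 <= yy < h and 0 <= xx < w:
                total += abs(a[y][x] - b[yy][xx])
                count += 1
    return total / count if count else float("inf")


def best_shift(a: Image, b: Image, radius: int = 2) -> Tuple[int, int]:
    """Test every (dy, dx) in [-radius, radius]^2 and return the lowest-error shift."""
    best, best_err = (0, 0), float("inf")
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            err = mean_abs_diff(a, b, dy, dx)
            if err < best_err:
                best, best_err = (dy, dx), err
    return best
```

Averaging over the overlap instead of summing keeps large shifts, whose overlap is smaller, from being unfairly favoured. On a PPA, the per-pixel differences for each candidate shift could in principle be computed for all pixels at once, leaving only the small loop over shifts.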

Friday 20 October 2017

Trespassing the Boundaries: Labeling Temporal Bounds for Object Interactions in Egocentric Video (ICCV2017)

Trespassing the Boundaries: Labeling Temporal Bounds for Object Interactions in Egocentric Video. D Moltisanti, M Wray, W Mayol-Cuevas, D Damen. International Conference on Computer Vision (ICCV), 2017. pdf

Monday 25 September 2017

Visual target tracking with a Pixel Processor Array (IROS2017)

"Tracking control of a UAV with a parallel visual processor", C. Greatwood, L. Bose, T. Richardson, W. Mayol-Cuevas, J. Chen, S.J. Carey and P. Dudek, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2017 [PDF].

This paper presents a vision-based control strategy for tracking a ground target using a novel vision sensor featuring a processor for each pixel element. This enables computer vision tasks to be carried out directly on the focal plane in a highly efficient manner rather than using a separate general purpose computer. The strategy enables a small, agile quadrotor Unmanned Air Vehicle (UAV) to track the target from close range using minimal computational effort and with low power consumption. The tracking algorithm exploits the parallel nature of the visual sensor, enabling high rate image processing ahead of any communication bottleneck with the UAV controller. With the vision chip carrying out the most intense visual information processing, it is computationally trivial to compute all of the controls for tracking onboard. This work is directed toward visual agile robots that are power efficient and that ferry only useful data around the information and control pathways.

Visit the project website at:

www.project-agile.org

Wednesday 8 March 2017

Automated capture and delivery of assistive task guidance with an eyewear computer: The GlaciAR system (Augmented Human 2017)

An approach that allows both automatic capture and delivery of mixed reality guidance fully onboard Google Glass.

From the paper:

Teesid Leelasawassuk, Dima Damen, Walterio Mayol-Cuevas, Automated capture and delivery of assistive task guidance with an eyewear computer: The GlaciAR system. Augmented Human 2017.

https://arxiv.org/abs/1701.02586

In this paper we describe and evaluate an assistive mixed reality system that aims to augment users in tasks by combining automated and unsupervised information collection with minimally invasive video guides. The result is a fully self-contained system that we call GlaciAR (Glass-enabled Contextual Interactions for Augmented Reality). It operates by extracting contextual interactions from observing users performing actions. GlaciAR is able to i) automatically determine moments of relevance based on a head motion attention model, ii) automatically produce video guidance information, iii) trigger these guides based on an object detection method, iv) learn without supervision from observing multiple users and v) operate fully on-board a current eyewear computer (Google Glass). We describe the components of GlaciAR together with user evaluations on three tasks. We see this work as a first step toward scaling up the notoriously difficult authoring problem in guidance systems and an exploration of enhancing users' natural abilities via minimally invasive visual cues.

Wednesday 4 November 2015

Investigating Spatial Guidance for a Cooperative Handheld Robot

Improving MAV Control by Predicting Aerodynamic Effects of Obstacles

Building on our previous work, we demonstrate how it is possible to improve flight control of a MAV that experiences aerodynamic disturbances caused by objects in its path. Predictions based on low-resolution depth images taken at a distance are incorporated into the flight control loop on the throttle channel, which is adjusted to target undisrupted level flight. We demonstrate that a statistically significant improvement (p << 0.001) is possible for some common obstacles, such as boxes and steps, compared to conventional feedback-only control. Our approach and results are encouraging for more autonomous MAV exploration strategies.

- John Bartholomew, Andrew Calway, Walterio Mayol-Cuevas, Improving MAV Control by Predicting Aerodynamic Effects of Obstacles. IEEE/RSJ International Conference on Intelligent Robots and Systems. September 2015. [PDF]
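The abstract describes feeding predicted disturbances into the throttle channel rather than relying on feedback alone. A minimal sketch of why this helps, under heavy simplifying assumptions: a 1D unit-mass altitude model, a PD feedback law with arbitrary gains, and a predicted disturbance that is supplied directly as a number. In the paper the prediction comes from low-resolution depth images; here the predictor is omitted entirely, and the function name `max_altitude_error` is hypothetical.

```python
from typing import Sequence


def max_altitude_error(disturbance: Sequence[float],
                       feedforward: Sequence[float],
                       kp: float = 2.0, kd: float = 1.5,
                       dt: float = 0.05) -> float:
    """Simulate a 1D unit-mass altitude loop and return the peak |altitude error|.

    z is the altitude error from the target height and v its rate of change.
    The throttle correction is PD feedback plus an optional feedforward term
    (hypothetically, the predicted aerodynamic disturbance with its sign flipped).
    """
    z, v = 0.0, 0.0
    peak = 0.0
    for d, ff in zip(disturbance, feedforward):
        u = -kp * z - kd * v + ff  # feedback + feedforward throttle correction
        a = u + d                  # net vertical acceleration on a unit mass
        v += a * dt                # semi-implicit Euler integration
        z += v * dt
        peak = max(peak, abs(z))
    return peak


# Hypothetical scenario: a downward push while flying over an obstacle.
gust = [0.0] * 20 + [-1.0] * 40 + [0.0] * 40
feedback_only = max_altitude_error(gust, [0.0] * len(gust))
with_prediction = max_altitude_error(gust, [-d for d in gust])
```

With a perfect prediction the feedforward term cancels the disturbance before any error builds up, while the feedback-only loop must first deviate before it can react. A real predictor is imperfect, so in practice the feedforward term would reduce, rather than eliminate, the deviation.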

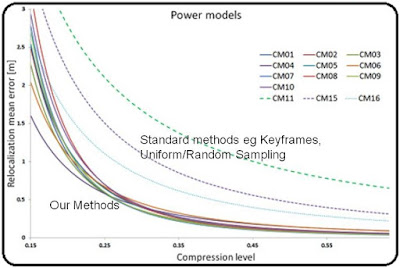

Beautiful vs Useful Maps

- Luis Contreras Toledo, Walterio Mayol-Cuevas, Trajectory-Driven Point Cloud Compression Techniques for Visual SLAM. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). October 2015. [PDF]

Tuesday 1 September 2015

Estimating Visual Attention from a Head Mounted IMU

How can an eyewear computer estimate attention without a gaze tracker? We developed this method for Google Glass.

This paper is concerned with evaluating methods for estimating both temporal and spatial visual attention using a head-worn inertial measurement unit (IMU). Aimed at tasks involving wearer-object interaction, we estimate when and where the wearer's interest lies. We evaluate various methods on a new egocentric dataset from 8 volunteers and compare our results with those achievable with a commercial gaze tracker used as ground truth. Our approach is primarily geared towards sensor-minimal eyewear computing.

- Teesid Leelasawassuk, Dima Damen, Walterio W Mayol-Cuevas, Estimating Visual Attention from a Head Mounted IMU. ISWC '15 Proceedings of the 2015 ACM International Symposium on Wearable Computers. ISBN 978-1-4503-3578-2, pp. 147–150. September 2015. [PDF]

Wednesday 6 May 2015

Accurate Photometric and Geometric Error Minimisation with Inverse Depth & What to landmark?

- D. Gutiérrez-Gómez, W. Mayol-Cuevas, J.J. Guerrero. "Inverse Depth for Accurate Photometric and Geometric Error Minimisation in RGB-D Dense Visual Odometry", In IEEE International Conference on Robotics and Automation (ICRA), 2015. Nominated for Best Robotic Vision Paper Award. [pdf][video][code available]

- D. Gutiérrez-Gómez, W. Mayol-Cuevas, J.J. Guerrero. "What Should I Landmark? Entropy of Normals in Depth Juts for Place Recognition in Changing Environments Using RGB-D Data", In IEEE International Conference on Robotics and Automation (ICRA), 2015. [pdf]

Monday 1 September 2014

Cognitive Handheld Robots

We have been building prototypes for 2.5 years (and working on the concept since 2006!) of what we think is a new, extended type of robot. Handheld robots have the shape of tools and are intended to have cognition and action while cooperating with people. This video is from our first prototype, back in November 2013. We also offer details of its construction and 3D CAD models at www.handheldrobotics.org. We are currently developing a new prototype, with more on this soon. Austin Gregg-Smith is sponsored by the James Dyson Foundation.

- Austin Gregg-Smith and Walterio Mayol. The Design and Evaluation of a Cooperative Handheld Robot. IEEE International Conference on Robotics and Automation (ICRA). Seattle, Washington, USA. May 25th-30th, 2015. [PDF] Nominated for Best Cognitive Robotics Paper Award.

Saturday 9 August 2014

Recognition and Reconstruction of Transparent Objects for Augmented Reality

Friday 1 August 2014

Discovering Task Relevant Objects, their Usage and Providing Video Guides from Multi-User Egocentric Video

- Damen, Dima and Leelasawassuk, Teesid and Haines, Osian and Calway, Andrew and Mayol-Cuevas, Walterio (2014). You-Do, I-Learn: Discovering Task Relevant Objects and their Modes of Interaction from Multi-User Egocentric Video. British Machine Vision Conference (BMVC), Nottingham, UK. [pdf]

- Damen, Dima and Haines, Osian and Leelasawassuk, Teesid and Calway, Andrew and Mayol-Cuevas, Walterio (2014). Multi-user egocentric Online System for Unsupervised Assistance on Object Usage. ECCV Workshop on Assistive Computer Vision and Robotics (ACVR), Zurich, Switzerland. [preprint]

Wednesday 23 April 2014

Learning to Predict Obstacle Aerodynamics from Depth Images for Micro Air Vehicles

Given how easily 3D maps can now be constructed, this work aims to enrich maps with information beyond pure geometry. We have also closed the control loop so that we correct for the deviation in anticipation, but that is for another paper.

- John Bartholomew, Andrew Calway and Walterio Mayol-Cuevas, Learning to Predict Obstacle Aerodynamics from Depth Images for Micro Air Vehicles. IEEE ICRA 2014. [PDF]

Saturday 12 October 2013

3D from Looking: Using Wearable Gaze Tracking for Hands-Free and Feedback-Free Object Modelling

How to get a 3D model of something one looks at without any clicks or even feedback to the user?

From our paper T. Leelasawassuk and W.W. Mayol-Cuevas. 3D from Looking: Using Wearable Gaze Tracking for Hands-Free and Feedback-Free Object Modelling. ISWC 2013.

Monday 1 July 2013

Real-time 3D simultaneous localization and map-building for a dynamic walking humanoid robot

S Yoon, S Hyung, M Lee, KS Roh, SH Ahn, A Gee, P Bunnun, A Calway, & WW Mayol-Cuevas,

Advanced Robotics, published online on May 1st, 2013.

Friday 28 June 2013

Real-Time Continuous 6D Relocalisation for Depth Cameras

In this work we present our fast (50Hz) relocalisation method, based on simple visual descriptors plus a 3D geometric test, for a system performing visual 6-D relocalisation at every frame in real time. Continuous relocalisation is useful when re-exploring scenes and for loop closure. Our experiments suggest the feasibility of this novel approach, which benefits from depth camera data, with a relocalisation performance of 73% while running on a single core onboard a moving platform over trajectory segments of about 120m. The system also reduces the memory footprint by 95% compared to a system using conventional SIFT-like descriptors.

- J. Martinez-Carranza, Walterio Mayol-Cuevas. Real-Time Continuous 6D Relocalisation for Depth Cameras. Workshop on Multi VIew Geometry in RObotics (MVIGRO), in conjunction with Robotics: Science and Systems (RSS). Berlin, Germany. June 2013. PDF

- J. Martinez Carranza, A. Calway, W. Mayol-Cuevas, Enhancing 6D visual relocalisation with depth cameras. International Conference on Intelligent Robots and Systems IROS. November 2013.

Wednesday 1 May 2013

Mapping and Auto Retrieval for Micro Air Vehicles (MAV) using RGBD Visual Odometry

These videos show work we have been doing with our partners at Blue Bear on onboard visual mapping for MAVs. It is based on visual odometry mapping for working over large areas, building maps onboard the MAV using an Asus Xtion Pro RGBD camera mounted on the vehicle. One of the videos shows auto-retrieval of the vehicle: a human pilot first flies the vehicle through the space, and the map built on the way out is then used for relocalisation on the way back. The other video is from a nuclear reactor installation. This is part of our ongoing work on applying our methods to industrial inspection.

Monday 25 March 2013

Thursday 1 November 2012

Integrating 3D Object Detection, Modelling and Tracking on a Mobile Phone

- Pished Bunnun, Dima Damen, Andrew Calway, Walterio Mayol-Cuevas, Integrating 3D Object Detection, Modelling and Tracking on a Mobile Phone. International Symposium on Mixed and Augmented Reality (ISMAR). November 2012. PDF.

Sunday 7 October 2012

Predicting Micro Air Vehicle Landing Behaviour from Visual Texture

How can a UAV decide where best to land, and what to expect when landing on a particular material? Here we develop a framework to predict the landing behaviour of a Micro Air Vehicle (MAV) from the visual appearance of the landing surface. We approach this problem by learning a mapping from visual texture observed from an onboard camera to the landing behaviour on a set of sample materials. In this case, we exemplify our framework by predicting the yaw angle of the MAV after landing.

- John Bartholomew, Andrew Calway, Walterio Mayol-Cuevas, Predicting Micro Air Vehicle Landing Behaviour from Visual Texture. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). October 2012. PDF.

Egocentric Real-time Workspace Monitoring using an RGB-D Camera

We developed an integrated system for personal workspace monitoring based around an RGB-D sensor. The approach is egocentric, facilitating full flexibility, and operates in real time, providing object detection and recognition, and 3D trajectory estimation while the user undertakes tasks in the workspace. A prototype on-body system developed in the context of work-flow analysis for industrial manipulation and assembly tasks is described. We evaluated it on two tasks with multiple users, and the results indicate that the method is effective, with good accuracy.

Monday 3 September 2012

6D RGBD relocalisation

- Andrew P. Gee, Walterio Mayol-Cuevas, 6D Relocalisation for RGBD Cameras Using Synthetic View Regression. Proceedings of the British Machine Vision Conference (BMVC). September 2012. PDF.